Automatic message extraction with AI

As your project grows, the addition of multi-language support is often postponed, with more code accumulating daily through new features. Product managers push for translations, but the task of extracting messages from the source code becomes increasingly time-consuming. How about using AI to simplify this process? 😏

In this article, I’ll introduce a straightforward command-line app written in TypeScript, an idea I've had in mind ever since I first learned about OpenAI. The concept is simple: use AI to batch-process source code and build something practical.

I finally decided to give it a shot, and I've built a simple CLI that:

- scans the source code files one by one in a specified directory,

- extracts messages from the source code files,

- generates diffs to facilitate multi-language (i18n) support, and

- applies these diffs to the source files.

Let’s dive into how it works!

i18n-wizard

i18n-wizard is a lightweight CLI that extracts inline texts and generates relevant translation keys. It can also “convert” inline strings to translation keys for easy integration with your preferred i18n library. The app is available for free via npx. Under the hood, the CLI uses OpenAI to generate diffs, which lets it insert libraries, rename variables, or make other adjustments to the source code without a specialized parser.

Automatic message extraction

The CLI’s primary feature is extracting messages from source code files. Many other tools do this, but they are usually tied to a specific framework or language. Using AI makes the CLI vendor-agnostic: it doesn't have to implement a parser for every language or framework and keep it up to date.

Automatic library insertion

Another feature is generating diffs for source files, which lets you move inline strings to the i18n library of your choice. As with message extraction, the CLI uses OpenAI to generate the diffs, so it can insert libraries, rename variables, or make other changes to the source code without a dedicated parser for every language or framework.

The app is still in its early stages and may generate invalid diffs, so manually review the results and test on a small subset of files before running it across your project. It's not perfect, but it can speed up the process of adding multi-language support to your project.

How to use i18n-wizard

Before running the CLI on your entire project:

- test on a small subset of files to see if the diffs are generated correctly,

- ensure you have a source code backup since the CLI may alter files unexpectedly,

- don't keep any sensitive information in the source code files, as they will be sent to OpenAI.

Prepare a prompt file

We start by telling the AI what we want to achieve. The CLI supports custom prompts, so you can adjust the prompt to your needs. This part is crucial, as the quality of the diffs depends on the quality of the prompt.

Create a prompt.txt file; as a starting point you can use the following prompt:

You are a software developer working on a project that needs to be translated into multiple languages.

You have been tasked with extracting all the texts that need to be translated from the source code.

Find all plain texts in the source code that should be translated and think of proper names for the found translation keys.

Return all translation keys with messages as a valid JSON that looks like this: {"d": "", "e": [{"k": "translation key", "m": "text"}]},

where "e" has a list with your translation keys and found texts. "k" is your translation key and "m" is the original text from the source code.

If there is nothing to translate, you can respond with an empty diff patch and an empty list of extracted translations.

Here is the source code you need to analyze:

{__fileContent__}

You can be more specific about the language or framework you are using, so the AI can generate better diffs.

Do not remove the {__fileContent__} placeholder, as it will be replaced with the actual content of the file during the process. You can also include {__filePath__} placeholder if you need it.
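To make the placeholder mechanism concrete, here is a minimal sketch of how such a substitution could work. The fillPrompt function and its signature are hypothetical and only illustrate the idea; the CLI's real implementation may differ.

```typescript
// Hypothetical helper: fills the two placeholders described above.
// The name and signature are illustrative, not the CLI's actual code.
function fillPrompt(template: string, filePath: string, fileContent: string): string {
  if (!template.includes("{__fileContent__}")) {
    throw new Error("Prompt template is missing the {__fileContent__} placeholder");
  }
  // split/join replaces every occurrence and avoids the special "$" patterns
  // that String.prototype.replace interprets in replacement strings.
  // The file content is inserted last, so placeholders that happen to appear
  // inside the source file itself are left untouched.
  return template
    .split("{__filePath__}").join(filePath)
    .split("{__fileContent__}").join(fileContent);
}

const promptTemplate =
  "File: {__filePath__}\nHere is the source code you need to analyze:\n{__fileContent__}";
const filledPrompt = fillPrompt(
  promptTemplate,
  "components/Footer.tsx",
  '<a href="/docs/">Help</a>',
);
console.log(filledPrompt);
```

Failing early when {__fileContent__} is missing avoids sending a prompt that contains no source code at all.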

Prepare CLI command

To start the process, you need to run:

npx @simplelocalize/i18n-wizard ./my-directory/**/*.{tsx,ts}

But before we do that, set additional parameters as necessary:

- --prompt: path to the prompt file, by default it's ./prompt.txt,

- --output: path to the output file, by default it's ./extraction.json,

- --openAiKey: OpenAI API key, you can also set it as an environment variable (OPENAI_API_KEY),

- --openAiModel: OpenAI model, default is gpt-4.1,

- --extractMessages: extract messages from the source code, by default it's false,

- --generateDiff: generate a diff file with changes made by the CLI, by default it's false,

- --applyDiff: apply the diff file to the source code, by default it's false.
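The defaults above can be summarized as a small TypeScript sketch. The option names and default values come from the list; the WizardOptions interface and the resolveOptions helper are hypothetical and are not taken from the CLI's source.

```typescript
// Option names and defaults mirror the list above. The types and the
// resolveOptions helper are hypothetical, for illustration only.
interface WizardOptions {
  prompt: string;
  output: string;
  openAiKey: string | undefined;
  openAiModel: string;
  extractMessages: boolean;
  generateDiff: boolean;
  applyDiff: boolean;
}

const DEFAULT_OPTIONS: Omit<WizardOptions, "openAiKey"> = {
  prompt: "./prompt.txt",
  output: "./extraction.json",
  openAiModel: "gpt-4.1",
  extractMessages: false,
  generateDiff: false,
  applyDiff: false,
};

function resolveOptions(
  cli: Partial<WizardOptions>,
  env: Record<string, string | undefined>,
): WizardOptions {
  return {
    ...DEFAULT_OPTIONS,
    ...cli,
    // An explicit --openAiKey wins; otherwise fall back to OPENAI_API_KEY.
    openAiKey: cli.openAiKey ?? env["OPENAI_API_KEY"],
  };
}

// Example: only --extractMessages is passed, the key comes from the environment.
const resolvedOptions = resolveOptions(
  { extractMessages: true },
  { OPENAI_API_KEY: "sk-example" },
);
console.log(resolvedOptions);
```

Passing the environment in as a parameter keeps the helper easy to test; in a real CLI it would most likely be process.env.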

In the following examples I'm going to show you how to use the CLI with different options. I've used environment variables to set the OpenAI API key for simplicity.

Running the CLI without any options only shows which files are going to be processed.

Sample TSX file with React component

As an example, I'm going to use a simple React component that contains some text that needs to be translated. It's a footer component that contains links to help, contact, support, privacy policy, and terms of service pages.

import * as React from "react";

const Footer = () => {
  return (
    <footer className="footer-landing no-print">
      <div className="container">
        <div className="footer-landing__content">
          <nav>
            <img
              src="/render-form.svg"
              width="45"
              height="45"
              className="navbar-brand"
              alt="RenderForm"
            />
            <ul>
              <li>
                <a href="/docs/">Help</a>
              </li>
              <li>
                <a href="mailto:contact@renderform.io">Contact</a>
              </li>
              <li>
                <a href="/docs/general/support/">Support</a>
              </li>
              <li>
                <a href="/legal/privacy-policy/">Privacy Policy</a>
              </li>
              <li>
                <a href="/legal/terms-of-service/">Terms of Service</a>
              </li>
            </ul>
          </nav>
        </div>
      </div>
    </footer>
  );
};

export default Footer;

Run only message extraction

To extract messages from the source code, you can use the following command:

npx @simplelocalize/i18n-wizard --extractMessages ./my-directory/**/*.{tsx,ts}

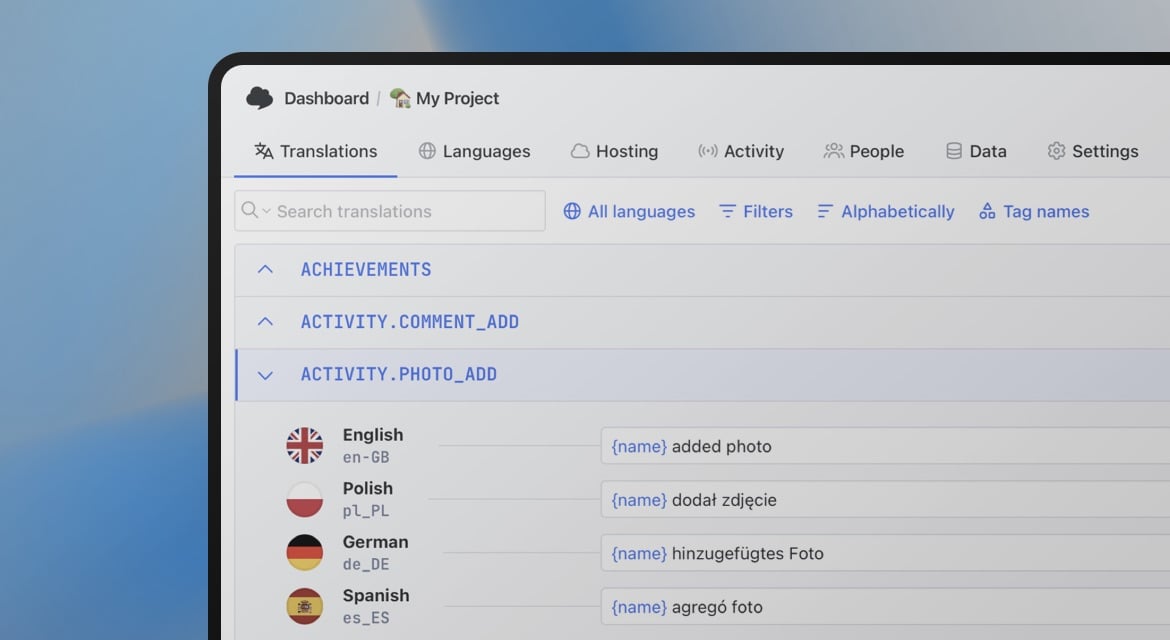

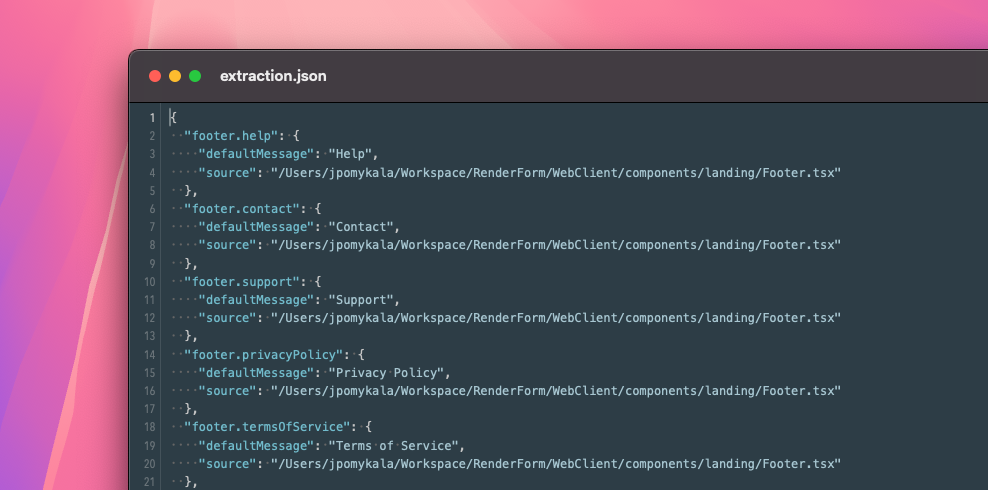

The CLI produced the following output in extraction.json. The output file follows the SimpleLocalize JSON format, so it can be imported to SimpleLocalize without any changes.

{
  "footer.help": {
    "defaultMessage": "Help",
    "source": "/Users/jpomykala/Workspace/RenderForm/WebClient/components/landing/Footer.tsx"
  },
  "footer.contact": {
    "defaultMessage": "Contact",
    "source": "/Users/jpomykala/Workspace/RenderForm/WebClient/components/landing/Footer.tsx"
  },
  "footer.support": {
    "defaultMessage": "Support",
    "source": "/Users/jpomykala/Workspace/RenderForm/WebClient/components/landing/Footer.tsx"
  }
}
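The sample prompt asks the model for a compact {"d": ..., "e": [...]} response, while the output file uses the key-to-entry shape shown above. A minimal sketch of how one could be mapped to the other follows. The toExtraction helper is hypothetical, and reading "d" as the field that carries the diff is an assumption based on the prompt wording.

```typescript
// Shape requested by the sample prompt. "d" is assumed to carry the diff,
// "e" holds the key/message pairs.
interface ModelResponse {
  d: string;
  e: { k: string; m: string }[];
}

// Shape of the entries shown in extraction.json.
type Extraction = Record<string, { defaultMessage: string; source: string }>;

// Hypothetical helper, not the CLI's actual code.
function toExtraction(rawJson: string, sourcePath: string): Extraction {
  const parsed = JSON.parse(rawJson) as Partial<ModelResponse>;
  if (!Array.isArray(parsed.e)) {
    throw new Error('Model response has no "e" array');
  }
  const result: Extraction = {};
  for (const entry of parsed.e) {
    // Skip malformed entries instead of failing the whole file.
    if (typeof entry?.k !== "string" || typeof entry?.m !== "string") continue;
    result[entry.k] = { defaultMessage: entry.m, source: sourcePath };
  }
  return result;
}

const rawResponse =
  '{"d": "", "e": [{"k": "footer.help", "m": "Help"}, {"k": "footer.contact", "m": "Contact"}]}';
const extraction = toExtraction(rawResponse, "components/landing/Footer.tsx");
console.log(JSON.stringify(extraction, null, 2));
```

Since model output is not guaranteed to be valid JSON, a real implementation would probably also wrap JSON.parse in error handling and report which file produced the bad response.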

The quality of the messages depends on the prompt file, so you might need to adjust it to get better results. The AI can also generate different keys and messages between runs. In the examples below, I've also used the gpt-3.5-turbo model, which is faster but might generate less accurate results. However, in my case, it worked very well (even better than GPT-4).

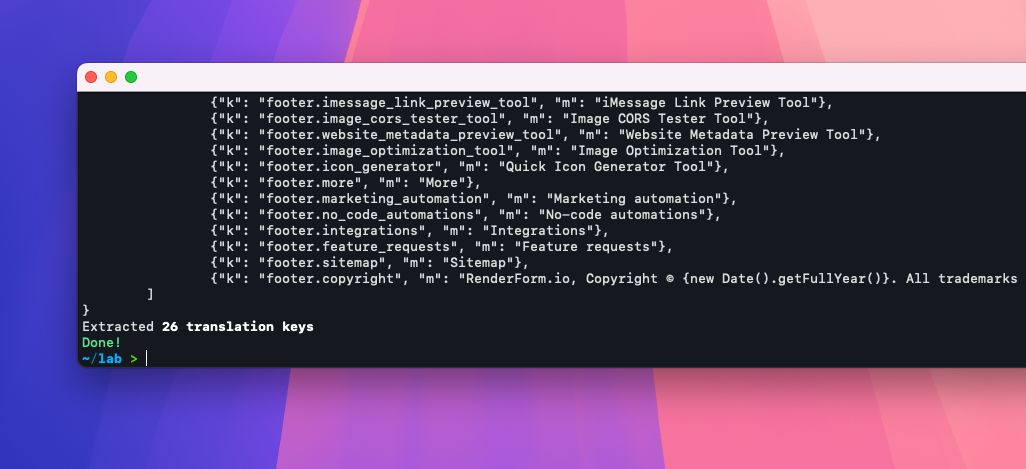

Run message extraction and diff generation

To extract messages and generate diffs for the source code files, you can use the following command:

npx @simplelocalize/i18n-wizard --extractMessages --generateDiff ./my-directory/**/*.{tsx,ts}

Diffs generated by OpenAI might not be valid, so review them and fix them manually before applying them to the source code. Otherwise, the CLI will fail to apply them.
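Part of the review can be automated. The sketch below assumes the diffs are in unified diff format (the article does not state the exact format) and checks only that each hunk body matches the line counts declared in its "@@ -start,count +start,count @@" header, a common way for generated diffs to go wrong. The findDiffProblems function is hypothetical and is not part of the CLI.

```typescript
// Hypothetical pre-check, assuming unified diff format. It verifies hunk
// line counts only; it does not check the diff against the target file.
function findDiffProblems(diff: string): string[] {
  const problems: string[] = [];
  const lines = diff.split("\n");
  const hunkHeader = /^@@ -\d+(?:,(\d+))? \+\d+(?:,(\d+))? @@/;
  let hunks = 0;
  let i = 0;
  while (i < lines.length) {
    const match = hunkHeader.exec(lines[i] ?? "");
    i++;
    if (!match) continue; // file headers ("---", "+++") and other lines
    hunks++;
    const headerLine = i; // 1-based line number of the hunk header
    // A count omitted from the header means 1 in the unified format.
    const oldCount = match[1] === undefined ? 1 : Number(match[1]);
    const newCount = match[2] === undefined ? 1 : Number(match[2]);
    let oldSeen = 0;
    let newSeen = 0;
    while (i < lines.length && (oldSeen < oldCount || newSeen < newCount)) {
      const line = lines[i] ?? "";
      if (line.startsWith(" ")) { oldSeen++; newSeen++; } // context line
      else if (line.startsWith("-")) oldSeen++;            // removed line
      else if (line.startsWith("+")) newSeen++;            // added line
      else if (!line.startsWith("\\")) break;              // "\ No newline..." is skipped
      i++;
    }
    if (oldSeen !== oldCount || newSeen !== newCount) {
      problems.push(
        `hunk at line ${headerLine}: header declares -${oldCount}/+${newCount}, body has -${oldSeen}/+${newSeen}`,
      );
    } else if (/^[ +]/.test(lines[i] ?? "")) {
      problems.push(`hunk at line ${headerLine}: body has more lines than the header declares`);
    }
  }
  if (hunks === 0) problems.push("no hunks found");
  return problems;
}

const sampleDiff = [
  "--- a/Footer.tsx",
  "+++ b/Footer.tsx",
  "@@ -1,3 +1,3 @@",
  " <li>",
  '-  <a href="/docs/">Help</a>',
  '+  <a href="/docs/">{t("footer.help")}</a>',
  " </li>",
].join("\n");
console.log(findDiffProblems(sampleDiff));
```

An empty result only means the hunk counts are consistent. The diff can still fail to apply if its context lines do not match the file, so a manual look remains necessary.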

Apply diffs to the source code

Once you generate diffs, you can apply them by enabling the --applyDiff option:

npx @simplelocalize/i18n-wizard --applyDiff ./my-directory/**/*.{tsx,ts}

If the CLI fails to apply a diff, it prints an error message, skips the file, and moves on to the next one. Correctly applied diffs are deleted automatically, so you can review any issues and run the CLI again.
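The skip-and-continue behaviour can be sketched as a simple loop. The code below is a hypothetical illustration, not the CLI's implementation: the applyDiff callback stands in for whatever patching routine is actually used, and the pending diffs are kept in a Map rather than in files.

```typescript
// Hypothetical sketch of the behaviour described above. The patching routine
// is injected so the loop can be shown without touching the file system.
type ApplyDiff = (filePath: string, diff: string) => void;

function applyPendingDiffs(
  pending: Map<string, string>, // file path -> diff text
  applyDiff: ApplyDiff,
): { applied: string[]; failed: string[] } {
  const applied: string[] = [];
  const failed: string[] = [];
  // Copy the entries first, because the map is modified while looping.
  for (const [filePath, diff] of Array.from(pending.entries())) {
    try {
      applyDiff(filePath, diff);
      pending.delete(filePath); // successfully applied diffs are removed
      applied.push(filePath);
    } catch (error) {
      // Report and move on; the diff stays in `pending` for review and retry.
      const reason = error instanceof Error ? error.message : String(error);
      console.error(`Skipping ${filePath}: ${reason}`);
      failed.push(filePath);
    }
  }
  return { applied, failed };
}

const pendingDiffs = new Map<string, string>([
  ["Footer.tsx", "valid diff"],
  ["Header.tsx", "broken diff"],
]);
const applyResult = applyPendingDiffs(pendingDiffs, (_filePath, diff) => {
  if (diff.startsWith("broken")) throw new Error("hunk does not match the file");
});
console.log(applyResult, Array.from(pendingDiffs.keys()));
```

Because only the failed diffs remain afterwards, a second run retries exactly the files that still need attention.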

Conclusions

Using AI to automate message extraction from source code is a great way to speed up adding multi-language support to your project. The CLI is not perfect, but it can help you move inline strings to the i18n library of your choice and reduce the amount of manual work. Creating a good prompt file is crucial for getting good results. Unfortunately, in many cases the diffs generated by the AI are not valid, but even then a well-adjusted prompt may still save you some manual work. It's a good way to start the localization process in projects that have never been localized before, or in projects with a lot of text to translate, but it's not a silver bullet.

The i18n-wizard CLI is open source, so you can review the code and contribute if you think you can improve it. I encourage you to try it and let me know what you think!

Known issues

OpenAI charges much more for output tokens than for input tokens, so running this CLI on large codebases may become costly. To reduce the cost, I decided to ask the AI to generate diffs instead of the whole file content. Unfortunately, it generates invalid diffs very often, which prevents the CLI from applying the changes to the source code.
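A rough calculation shows why diffs are cheaper than whole-file output. The prices and token counts below are made up purely for illustration and are not OpenAI's actual rates; only the relationship (output tokens cost more than input tokens) comes from the text above.

```typescript
// Prices are parameters on purpose: the numbers used below are hypothetical
// and only illustrate the input/output price asymmetry.
interface Pricing {
  inputPerMillion: number;  // price of 1M input tokens
  outputPerMillion: number; // price of 1M output tokens
}

function estimateCost(inputTokens: number, outputTokens: number, pricing: Pricing): number {
  return (
    (inputTokens * pricing.inputPerMillion + outputTokens * pricing.outputPerMillion) / 1e6
  );
}

const examplePricing: Pricing = { inputPerMillion: 1, outputPerMillion: 4 }; // hypothetical
const fileTokens = 2000; // assumed size of one source file
const diffTokens = 200;  // assumed size of a diff touching a few lines

// The whole file is always sent as input; only the output size differs.
const wholeFileCost = estimateCost(fileTokens, fileTokens, examplePricing);
const diffOnlyCost = estimateCost(fileTokens, diffTokens, examplePricing);
console.log({ wholeFileCost, diffOnlyCost });
```

With these assumed numbers, the output side dominates the whole-file variant, which is why shrinking the output to a diff helps. The trade-off is the one described above: a diff that cannot be applied has to be fixed by hand or regenerated.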

Contributing

Feel free to fork the repository, customize it to your needs, or submit PRs for new features! If you have any questions or need help, reach out to me via email.